Online skill ranking was one of the big features added to the Deluxe edition of INVERSUS, and building it was uncharted territory for me. It required a bunch of learning and is one of the systems players ask about the most, so I’d like to share some behind-the-scenes details regarding how it works.

Rank Points and Skill Rating

From the player’s perspective, they earn and lose Rank Points (RP) while playing online. I wanted the Rank Points system to achieve two goals:

By inspecting a player’s RP, you can tell how challenging they are. Players with a higher RP are expected to defeat players with a lower RP.

You are awarded and deducted RP in a manner that matches your expectations. If you win, your RP should increase. If you lose, your RP should decrease.

While those goals might not sound like anything special, they can actually conflict because computing a statistically accurate skill rating (the first goal) can produce results which are both confusing and do not match player expectations (the second goal).

There might be wild fluctuations as the system is first learning how you perform.

There might be counterintuitive ups and downs, such as an increase in skill after an expected loss due to the system’s confidence level in your skill increasing more than its penalty for the match.

You might not actually gain any skill after a win if you were expected to win.

To mitigate these issues, the actual skill rating of the player is decoupled from the player-facing representation of Rank Points. Before getting into how the Rank Points are calculated, let’s dig into the actual skill rating system they are built upon.

Computing The Skill Rating

The underlying estimation of player skill is built on top of the Glicko-2 rating system. For each player, it tracks an expected rating value, a standard deviation (i.e. how uncertain are we of the accuracy), and a volatility describing the expected fluctuation in match results. There are other rating systems out there – some of which are likely better – but I chose to build on top of Glicko-2 due to it being a freely available algorithm, and proven serviceable in prior games. Before digging into any code, I want to cover how the algorithm works at a high level and some problems it presents.

Glicko is built on the concept of rating periods. In an optimal scenario, players would have a bunch of matches across some period of time – let’s say a week – and then the full set of results is fed into the system which outputs new skill rating values. Each match finished during a rating period adjusts skill ratings based on how the match results differ from predictions. If the predictions were wrong, ratings are adjusted such that they are more likely to be correct in the future. Each completed match also increases the confidence in your skill rating accuracy, while confidence also slowly decreases once per rating period. If you stop playing the game, it will grow more and more uncertain about where your skill rating lies because it doesn’t know if your time away was spent lounging or practicing. Until it regains confidence, your matches will have less of an effect on the ratings of other players.

But I don’t want rating periods!

The primary issue games are likely to have when integrating Glicko-2 is the rating period requirement. I want my skill to update immediately. I don’t want to wait until the end of the week. I might even only play the game during launch week! To solve this, I force a square peg into a round hole with the understanding that things will (more or less) work out fine. An INVERSUS rating period passes with each game played. If you play 10 games in one day and I only play 2 games, the math will have evaluated 5 times more rating periods for you than it did for me. It’s not optimal, but all the numbers still move in the right directions so life goes on.

If I stopped there, I would have a functional skill system, but I would lose the ability for uncertainty to grow due to inactivity. To prevent this issue, I also assign a period of real world time to a rating period (specifically 14 days). Every time a new match begins, I evaluate how long it has been since the prior match and process the appropriate amount of inactivity. When we get into the math, you’ll see that we can even do this for partial rating periods. INVERSUS evaluates inactivity at the granularity of 1 day. In other words, you need to stay offline for a full day before uncertainty starts to build.
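As a rough illustration of the inactivity step, here is a minimal Python sketch. It assumes the standard Glicko-2 rule for a player who sits out a rating period (the deviation becomes the square root of deviation squared plus volatility squared) and scales the volatility term by the fraction of a period that has elapsed. The function name, the cap on deviation and the sample values are assumptions made for illustration, not the shipped INVERSUS code.

```python
import math

RATING_PERIOD_DAYS = 14  # real-world length of one rating period (from the text)
SECONDS_PER_DAY = 86400
MAX_DEVIATION = 2.015    # assumed cap: the deviation of an unrated player


def apply_inactivity(deviation, volatility, seconds_since_last_match):
    """Grow a player's deviation for time spent away, in whole-day steps."""
    # Partial days are discarded, so less than a full day offline changes nothing.
    days_away = int(seconds_since_last_match // SECONDS_PER_DAY)
    # Fraction of a rating period that has passed (may be more than 1).
    periods = days_away / RATING_PERIOD_DAYS
    # Repeating sqrt(d^2 + v^2) n times equals sqrt(d^2 + n * v^2),
    # which extends naturally to a fractional n.
    grown = math.sqrt(deviation ** 2 + periods * volatility ** 2)
    return min(grown, MAX_DEVIATION)


# Hypothetical player: deviation 1.0, volatility 0.06, away for one week.
after_a_week = apply_inactivity(1.0, 0.06, 7 * SECONDS_PER_DAY)
```

Because the periods only enter as a multiplier on the squared volatility, half a rating period of absence simply adds half as much variance as a full one.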

UPDATE: Since writing this, I’ve had more thoughts on improving the rating period implementation which you can find here.

What about team vs team matches?

The next big problem with Glicko-2 relates to team games because it can only predict results between individual players. Once again, I bend the rules and coerce a team match through the system. Let’s consider some options for rating a 2v2 game where Alice and Alex defeat Betty and Bill.

Individual: You can process the match results between each pair of players. Alice would update her rating by processing a victory against Betty and a victory against Bill.

Composite Opponent: You can create a virtual opponent by averaging the skills of each player on the opposing team and then process against it. Alice would update her rating by processing a single victory against a virtual player that is the average of Betty and Bill.

Composite Team: You can create virtual players for your team and the opposing team. You then map the result between the virtual teams back onto your own rating. Alice would update her rating based on how the Alice+Alex virtual player’s rating gets modified when processing a victory against the Betty+Bill virtual player.

According to this paper, the Composite Opponent option performed the best when evaluating 5v5 matches in SoccerBots. That said, for INVERSUS, I ended up using the Composite Team approach. My reasoning was primarily based on the user experience in some corner cases and maybe a little “gut feel”.

In a 5v5 game, one player is going to have a harder time carrying a poor team to victory. This is even more true in a game design where players perform specific roles with heavy cooperation, such as in soccer. In contrast, team games in INVERSUS are 2v2 and rely less on role division and cooperation between the players. Because of this, it isn’t clear that the results from the listed paper actually map well onto my game design.

To discuss a more specific scenario, let’s put you in the shoes of an amazing player. You are going to be playing a 2v2 game, with a partner chosen at random, but the opposing team is fixed. In one scenario, your teammate is very low skill; in the other scenario, your teammate is as highly skilled as you. Using the Composite Opponent method, your team composition has no bearing on the change to your rating despite the fact that you are far more likely to lose with the first teammate. This is concerning because it reinforces a negative experience when you are playing alongside a lower skill player. In contrast, the Composite Team method handles this case well. Your low skill teammate will bring the rating of your whole team down and thus reduce the expectation of a victory. As a result, any losses will have a reduced impact on your rating.
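To put rough numbers on that scenario, the sketch below uses the standard Glicko-2 expected-score formula. The ratings and deviations are made-up values on the Glicko-2 scale, and the team rating is assumed to be a plain average of its members; treat it as an illustration of the reasoning rather than the INVERSUS implementation.

```python
import math


def g(deviation):
    """Glicko-2 weighting that discounts an opponent whose rating is uncertain."""
    return 1.0 / math.sqrt(1.0 + 3.0 * deviation ** 2 / math.pi ** 2)


def expected_score(rating, opp_rating, opp_deviation):
    """Glicko-2 predicted score (between 0 and 1) against a single opponent."""
    return 1.0 / (1.0 + math.exp(-g(opp_deviation) * (rating - opp_rating)))


# Hypothetical values: you are strong, one possible teammate is weak.
you, weak_mate, strong_mate = 2.0, -2.0, 2.0
opponents = 1.0       # averaged rating of the fixed opposing team
opp_deviation = 0.5   # averaged deviation of the opposing team

# Composite Opponent: only your own rating is compared, so the teammate is ignored.
solo = expected_score(you, opponents, opp_deviation)

# Composite Team: the averaged team rating is compared instead.
with_weak = expected_score((you + weak_mate) / 2, opponents, opp_deviation)
with_strong = expected_score((you + strong_mate) / 2, opponents, opp_deviation)
```

With the weak teammate the predicted score drops below one half, so a loss is less of a surprise and costs less rating. With the strong teammate the prediction matches what Composite Opponent would have said for you alone.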

This leaves us with the question of how to actually generate the virtual players for rating purposes. INVERSUS just averages the rating and deviation values of its members (which I will cover in detail later), but I suspect there might be a more optimal approach. This optimal method might also differ from game to game. It’s possible that some game designs should weight either lower skill or higher skill players more. You might even want to weight specific roles differently, such as the goalie in soccer. It might also make more sense to keep the maximum deviation value from the team instead of averaging it.
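The options in that paragraph can be sketched in a few lines of Python. The `Skill` type, the `composite` function and its parameters are hypothetical names chosen for this example. Only the default path (a plain average of rating and deviation) reflects what the text says INVERSUS does; the weighting and maximum-deviation variants are the speculative alternatives.

```python
from dataclasses import dataclass


@dataclass
class Skill:
    rating: float     # expected rating
    deviation: float  # standard deviation (uncertainty)


def composite(team, weights=None, use_max_deviation=False):
    """Collapse a team into a single virtual player."""
    if weights is None:
        weights = [1.0] * len(team)  # plain average
    total = sum(weights)
    rating = sum(w * p.rating for w, p in zip(weights, team)) / total
    if use_max_deviation:
        # Alternative: the team is only as certain as its least certain member.
        deviation = max(p.deviation for p in team)
    else:
        deviation = sum(w * p.deviation for w, p in zip(weights, team)) / total
    return Skill(rating, deviation)


# Hypothetical 2v2 team on the Glicko-2 scale.
alice = Skill(rating=1.0, deviation=0.5)
alex = Skill(rating=-1.0, deviation=1.5)
team = composite([alice, alex])
```

Passing `weights=[3, 1]` would lean the virtual player toward Alice, which is one way a design could favor the stronger player or a specific role.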

What do I really need to understand?

We’re about to take a tour through my actual Glicko-2 implementation and parts are confusing. There will be some seemingly magic numbers chosen to tune the overall system and then there’s the actual math for updating the players. Do you need to know what is actually happening? I’d say yes for some parts and no for others.

I’m the type of person that wants (or maybe even needs) to understand everything from first principles, but I also had a very pressing time constraint to get this finished fast. Having a thorough understanding of an algorithm will often let you improve upon it as you adapt it to your specific needs or even find some oversight that was missed in the original work, but in this case I only went halfway with my research. On the upside, by writing my own implementation, I was able to intuit some knowledge based on how the math operations would affect the output, and this led to an improved interface for my needs. I was also able to add some numerical bounds into the system such that values would never leave a range I could store in memory, could display on screen, had tested, etc.

When first learning how Glicko-2 functions, you will find this paper: Example of the Glicko-2 system. It covers the how, but not much of the why. As I mentioned, I didn’t have the luxury of getting deep into the why, but if you want to be a better engineer than I was, you can find the paper that Glicko-2 is based on here: Dynamic paired comparison models with stochastic variances.

Why the numerical bounds?

My Glicko-2 implementation applies bounds to each player value: rating, deviation and volatility. This serves three purposes:

Numbers are prevented from growing larger or smaller than memory supports. Limits on rating and deviation are chosen such that packing these values for transfer over the network is a safe process.

Numbers should remain in a range that the user interface can support. While I don’t display any of these values directly, they do influence the player-facing Rank Points and I want those to live in an expected range (e.g. never negative).

It’s nice to have a safety net when a system is this complex and you don’t claim to be an expert in its field. I did a bunch of simulated tests to make sure ratings evolved in a reasonable manner, but I was not confident in saying that things will 100% never explode due to some bug or edge case I hadn’t considered.
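To make the first purpose concrete, here is a small Python sketch of clamping a rating before quantizing it into a fixed-size field for network transfer. The bounds, the 16-bit format and the function names are placeholders invented for this example; the real limits and packing scheme are not shown here.

```python
import struct

# Placeholder bounds for illustration; not the limits INVERSUS ships with.
RATING_MIN, RATING_MAX = -8.0, 8.0


def clamp(value, low, high):
    """Restrict value to the inclusive range [low, high]."""
    return max(low, min(value, high))


def pack_rating(rating):
    """Clamp a rating, then quantize it into 2 little-endian bytes."""
    span = RATING_MAX - RATING_MIN
    unit = (clamp(rating, RATING_MIN, RATING_MAX) - RATING_MIN) / span  # 0..1
    return struct.pack("<H", round(unit * 0xFFFF))


def unpack_rating(data):
    """Recover the (quantized) rating from 2 bytes."""
    (quantized,) = struct.unpack("<H", data)
    return RATING_MIN + (quantized / 0xFFFF) * (RATING_MAX - RATING_MIN)


# A runaway value still fits in the field because it is clamped first.
restored = unpack_rating(pack_rating(1e9))
```

Without the clamp, a value outside the range would not fit in the 16-bit field, which is the kind of failure the bounds are meant to rule out.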

Technically, ratings can grow unbounded if more and more new players are allowed to enter the system and some devoted super player is always there waiting to pounce once they build up enough skill to be fed on. In practice, that isn’t likely to ever happen. The minimum and maximum skill ratings supported by INVERSUS were chosen to complement the state of an unrated player.

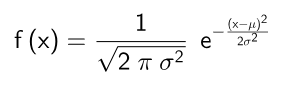

When a new player enters the system, they are given an initial expected rating, deviation and volatility. These values are chosen to imply that the player is probably average, but could also be the best player in the world or the worst. The new player is given an expected rating of μ = 0 and a standard deviation of σ = 2.015 (the Glicko recommended value). These parameters define what is called a normal distribution by using the following formula.

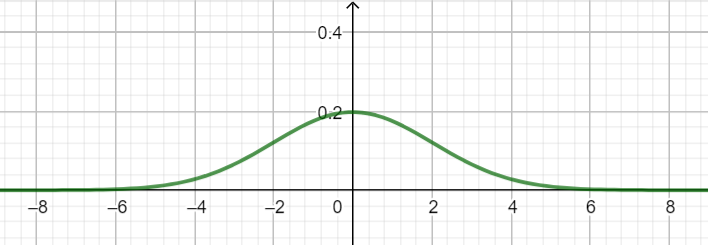

This graphs out a bell curve that can be used to evaluate the probability that a player’s skill is within a given range. To be more specific, if you compute the area between two input values (the definite integral), you will get a probability. Here is the graph for the initial player settings of μ = 0 and σ = 2.015.

If μ (the expected rating) were adjusted up and down, the hump of the curve would move right and left accordingly. If σ (the standard deviation) were decreased, it would represent more confidence and the hump would rise. In contrast, increasing σ would represent less confidence and the curve would flatten out.

To determine how likely the player’s skill is in the range of -2 to 2, you can evaluate the area under the graph within that range. In this case, it would be about 0.68 which means there is a 68% chance. If you were to evaluate between negative and positive infinity, you would get exactly 1.0 or 100% as expected.
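The area calculation can be checked with a few lines of Python. This sketch uses the standard normal density and the closed form of its cumulative distribution via the error function; the function names are chosen for this example.

```python
import math


def normal_pdf(x, mu, sigma):
    """Height of the bell curve at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def normal_cdf(x, mu, sigma):
    """Area under the curve from negative infinity up to x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2))))


def probability_between(low, high, mu=0.0, sigma=2.015):
    """Probability that the skill lies between low and high."""
    return normal_cdf(high, mu, sigma) - normal_cdf(low, mu, sigma)


# New player: mu = 0, sigma = 2.015.
p = probability_between(-2.0, 2.0)                # roughly 0.68
everything = probability_between(-math.inf, math.inf)
```

Shrinking `sigma` raises `normal_pdf(mu, mu, sigma)`, which is the taller hump described above, while the total area stays at 1.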

So how does this help define minimum and maximum bounds for the rating system? If we assume that my choice of standard deviation for a new player is a good representation of the probability of any skill rating across the entire population, the curve can tell us minimum and maximum values that are highly unlikely for anyone to reach. In