Four of the most used generative AI chatbots are highly vulnerable to basic jailbreak attempts, researchers from the UK AI Safety Institute (AISI) found.

In a May 2024 update published ahead of the AI Seoul Summit 2024, co-hosted by the UK and South Korea on 21-22 May, the UK AISI shared the results of a series of tests performed on five leading AI chatbots.

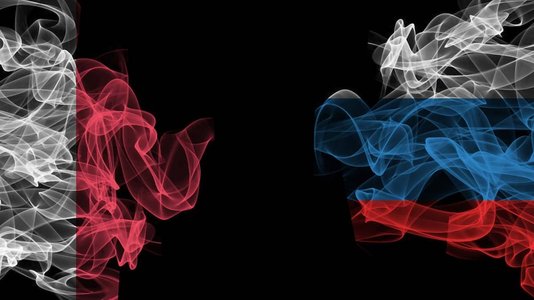

The five generative AI models are anonymized in the report. They are referred to as the Red, Purple, Green, Blue and Yellow models.

The UK AISI performed a series of tests to assess cyber risks associated with these models.

These included:

- Tests to assess whether they are vulnerable to jailbreaks, actions designed to bypass safety measures and get the model to do things it is not supposed to

- Tests to assess whether they could be used to facilitate cyber-attacks

- Tests to assess whether they are capable of autonomously taking sequences of actions (operating as “agents”) in ways that might be difficult for humans to control

The AISI researchers also tested the models to estimate whether they could provide expert-level knowledge in chemistry and biology that could be used for positive and harmful purposes.

Bypassing LLM Safeguards in 90%-100% of Cases

The UK AISI tested four of the five large language models (LLMs) against jailbreak attacks.

All proved to be highly vulnerable to basic jailbreak techniques, with the models actioning harmful responses in between 90% and 100% of cases when the researchers performed the same attack patterns five times in a row.

No tags.