I’m going to look at the history of the science of human color perception leading up to the modern video standards, and also try to explain some of the commonly used terminology. I’ll also briefly touch on how the pipeline of a typical game is becoming more and more like the one used in the motion picture industry.

Pioneers of color perception

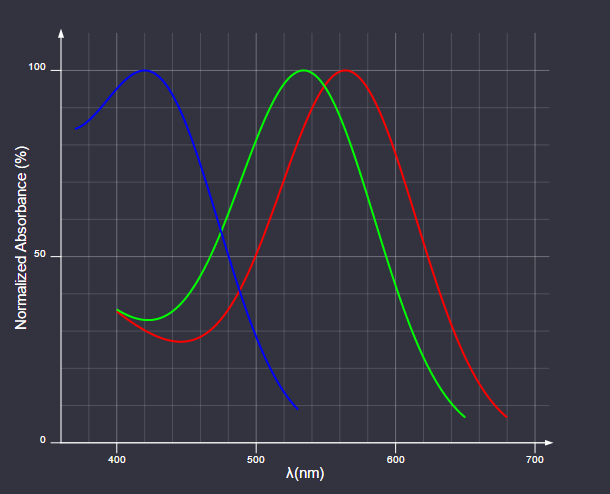

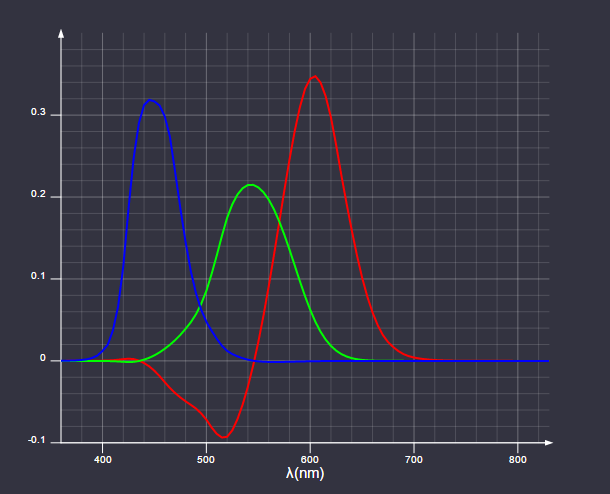

We know today that the human retina contains three different kinds of photoreceptive cells called cones. Each kind of cone contains a member of the protein family known as photopsins, which absorb light in different parts of the spectrum:

Photopsins absorbance

The cones corresponding to the red, green, and blue parts of the spectrum are also often referred to as long (L), medium (M), and short (S), indicating which wavelengths they are most receptive to.

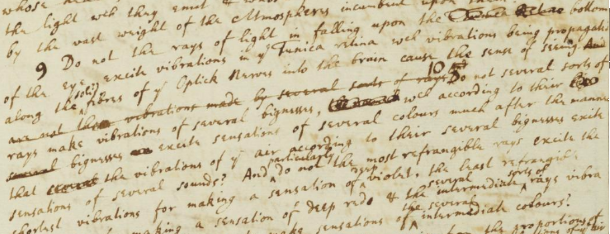

One of the earliest treatises on the interaction of light and the retina can be found in “Hypothesis Concerning Light and Colors” by Isaac Newton, probably written sometime around 1670-1675. Newton theorized that light of various wavelengths caused the retina to resonate at the same frequencies and that these vibrations were then propagated through the optic nerve to the “sensorium”.

Do not the rays of light in falling upon the bottom of the eye excite vibrations in the Tunica retina which vibrations being propagated along the fibres of the Optick nerves into the brain cause the sense of seeing. Do not several sorts of rays make vibrations of several bignesses which according to their bignesses excite sensations of several colours…

(I highly recommend having a look at the scans of Newton’s original drafts available at the Cambridge University web site. Talk about stating the obvious, but what a frigging genius!)

More than a hundred years later, Thomas Young speculated that since the resonance frequency is a system-dependent property, capturing light of all frequencies would require an infinite number of different resonance systems in the retina. Young found this unlikely and further speculated that the number was limited to three: one system each for red, yellow, and blue, the colors traditionally used in subtractive paint mixing. In his own words:

Since, for the reason here assigned by Newton, it is probable that the motion of the retina is rather of a vibratory than of an undulatory nature, the frequency of the vibrations must be dependent on the constitution of this substance. Now, as it is almost impossible to conceive each sensitive point of retina to contain an infinite number of particles, each capable of vibrating in perfect unison with every possible undulation, it becomes necessary to suppose the number limited, for instance to the three principal colours, red, yellow, and blue…

Young’s assumptions about the retina were not correct, but he still arrived at the right conclusion: that there is a finite number of categories of cells in the eye.

In 1850 Hermann von Helmholtz became the first to produce experimental evidence for Young’s theory. Helmholtz asked a test subject to match the colors of a number of reference light sources by adjusting the intensity of a set of monochromatic light sources. He came to the conclusion that three light sources, one in the red, one in the green, and one in the blue part of the spectrum, were necessary and sufficient to match all the references.

Birth of modern colorimetry

Fast forward to the early 1930s. By this time the scientific community had a pretty good idea of the inner workings of the eye (although it would take an additional 20 years before George Wald was able to experimentally verify the presence and workings of the visual pigments in the retina, work which would lead to him sharing the Nobel Prize in Physiology or Medicine in 1967). The Commission Internationale de l'Éclairage (International Commission on Illumination), CIE, set out to create a comprehensive quantification of the human perception of color. The quantification was based on experimental data collected by William David Wright and John Guild using a setup similar to the one pioneered by Hermann von Helmholtz. The primaries in the experiment were set to 700 nm for red, 546.1 nm for green, and 435.8 nm for blue.

John Guild’s experimental setup; the three knobs adjust the primaries

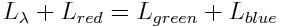

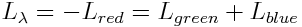

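Because of the significant overlap in sensitivity of the M and L cones, it was impossible to match some wavelengths in the blue to green part of the spectrum. In order to “match” these colors, some amount of the red primary had to be added to the reference so that:

$$C_\lambda + r\,\mathbf{R} = g\,\mathbf{G} + b\,\mathbf{B}$$

where $C_\lambda$ is the monochromatic reference light, $\mathbf{R}$, $\mathbf{G}$, $\mathbf{B}$ are the primaries, and $r$, $g$, $b$ are their adjusted intensities. If we imagine for a moment that we can have a negative contribution from the primaries, this can be rewritten as:

$$C_\lambda = -r\,\mathbf{R} + g\,\mathbf{G} + b\,\mathbf{B}$$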

The result of the experiments was a table of RGB triplets for each wavelength which when plotted looked like this:

CIE 1931 RGB color matching functions

Colors with a negative red component are of course not possible to display using the CIE primaries.

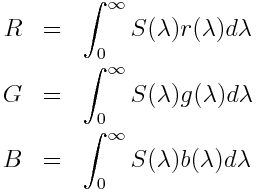

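We can now find the trichromatic coefficients for light of a given spectral power distribution, S, as the following inner products:

$$R = \int S(\lambda)\,\bar{r}(\lambda)\,d\lambda, \qquad G = \int S(\lambda)\,\bar{g}(\lambda)\,d\lambda, \qquad B = \int S(\lambda)\,\bar{b}(\lambda)\,d\lambda$$

where $\bar{r}$, $\bar{g}$, and $\bar{b}$ are the color matching functions.

It might seem obvious that the response to the various wavelengths can be integrated in this way, but it actually depends on color matching being linear: the match for a mixture of lights is the sum of the matches for its components. This was described by Hermann Graßmann in 1853, and the integrals above are the modern formulation of what is now known as Graßmann’s law.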
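A small numerical sketch can make the integrals concrete. The real CIE 1931 color matching functions are tabulated measurement data (and the red one dips below zero), so the `r_bar`, `g_bar`, and `b_bar` below are hypothetical Gaussian stand-ins, used only to show the structure. Each coefficient is a discretized inner product of the spectrum with one matching function, and that is exactly what makes the result linear in S.

```python
import math

# Sample wavelengths from 380 nm to 780 nm in 5 nm steps.
WAVELENGTHS = [380.0 + 5.0 * i for i in range(81)]
STEP = 5.0


def gaussian(center: float, width: float):
    """Return a Gaussian bump as a function of wavelength (nm)."""
    return lambda lam: math.exp(-0.5 * ((lam - center) / width) ** 2)


# HYPOTHETICAL stand-ins for the CIE 1931 RGB color matching functions.
# The real functions are tabulated data; these only mimic their rough placement.
r_bar = gaussian(600.0, 40.0)
g_bar = gaussian(545.0, 40.0)
b_bar = gaussian(445.0, 25.0)


def tristimulus(spectrum):
    """Approximate (R, G, B) = integral of S(lambda) * cmf(lambda) d(lambda)
    using a simple Riemann sum over the sampled wavelengths."""
    return tuple(
        sum(spectrum(lam) * cmf(lam) for lam in WAVELENGTHS) * STEP
        for cmf in (r_bar, g_bar, b_bar)
    )


# Two narrow-band test spectra and a mixture of them.
s1 = gaussian(520.0, 10.0)
s2 = gaussian(610.0, 15.0)


def mixture(lam):
    return s1(lam) + 2.0 * s2(lam)


# Linearity: the mixture's coefficients equal the weighted sum of the parts.
lhs = tristimulus(mixture)
rhs = tuple(a + 2.0 * b for a, b in zip(tristimulus(s1), tristimulus(s2)))
print(lhs, rhs)
```

Swapping the Gaussians for the tabulated CIE data would change the numbers but not the linearity, which only depends on the integral form.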

The name color space comes from the fact that the red, green, and blue primaries can be thought of as the basis of a vector space. In this space, each color that humans can perceive is represented by a ray through the origin. The modern definition of a vector space was introduced by Giuseppe Peano in 1888, but more than 30 years earlier James Clerk Maxwell had already used the fledgling theories of what would later become linear algebra to create a formal description of the trichromatic color system.

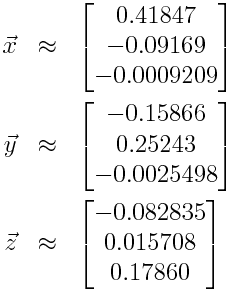

For computational reasons the CIE decided that it would be more convenient to work with a color space where the primary coefficients were always positive. Expressed in coordinates of the RGB color space the three new primaries were:

This new set of primaries can’t be realized in the physical world; it is only a mathematical tool to make the color space easier to work with. In addition to keeping the primary coefficients positive, the new space was arranged so that the Y coefficient of a color corresponds to its brightness as weighted by the spectral sensitivity of the eye. This component is known as the CIE luminance (read Charles Poynton’s excellent Color FAQ for more detail).
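Moving to the new primaries is just a change of basis, i.e. a 3×3 matrix. The sketch below uses the CIE RGB to XYZ coefficients as they are commonly quoted for the 1931 standard. Verify them against the CIE tables before using them for real work. The function name `cie_rgb_to_xyz` is our own. Each row sums to 1, so light with equal R, G, and B coefficients ends up with equal X, Y, and Z.

```python
# CIE 1931 RGB -> XYZ change of basis, as commonly tabulated.
# Each row sums to 1; the whole matrix is scaled by 1 / 0.17697.
_SCALE = 1.0 / 0.17697
_RGB_TO_XYZ = (
    (0.49000, 0.31000, 0.20000),  # X row
    (0.17697, 0.81240, 0.01063),  # Y row (luminance)
    (0.00000, 0.01000, 0.99000),  # Z row
)


def cie_rgb_to_xyz(rgb):
    """Map CIE RGB trichromatic coefficients to CIE XYZ coefficients."""
    return tuple(
        _SCALE * sum(m * c for m, c in zip(row, rgb)) for row in _RGB_TO_XYZ
    )


# Equal-energy light (R = G = B) lands on X = Y = Z.
X, Y, Z = cie_rgb_to_xyz((1.0, 1.0, 1.0))
print(X, Y, Z)
```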

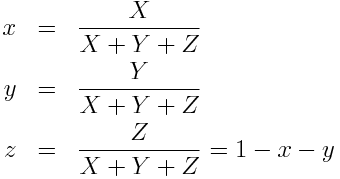

To make the resulting color space easier to visualize, we can make one final transformation. By dividing each component by the sum of the components, we end up with a dimensionless expression of a color that is independent of its brightness:

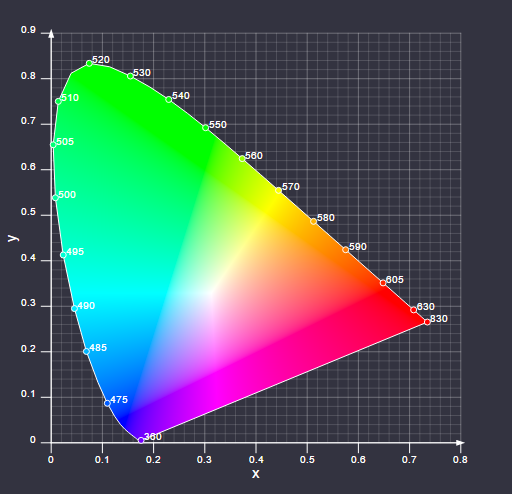

The x and y coordinates are known as the chromaticity coordinates, and together with the CIE luminance Y they form the CIE xyY color space. If we plot the chromaticity coordinates for all colors with a given luminance, we end up with the following diagram, which will probably look familiar:
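The projection to chromaticity and its inverse are short enough to write out. This is a minimal sketch with function names of our own. The inverse needs the luminance Y and is undefined when y is zero. Scaling a color's XYZ by a constant leaves x and y unchanged, which is the brightness independence described above.

```python
def xyz_to_xyY(X: float, Y: float, Z: float):
    """Project XYZ onto chromaticity (x, y), keeping the luminance Y."""
    total = X + Y + Z
    if total == 0.0:
        raise ValueError("chromaticity is undefined for black (X + Y + Z == 0)")
    return X / total, Y / total, Y


def xyY_to_xyz(x: float, y: float, Y: float):
    """Recover XYZ from chromaticity plus luminance (requires y != 0)."""
    if y == 0.0:
        raise ValueError("cannot recover XYZ when y == 0")
    X = x * Y / y
    Z = (1.0 - x - y) * Y / y
    return X, Y, Z


# Doubling the light doubles Y but leaves (x, y) unchanged.
dim = xyz_to_xyY(0.3, 0.4, 0.5)
bright = xyz_to_xyY(0.6, 0.8, 1.0)
print(dim, bright)
```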

CIE 1931 xyY diagram

One final concept that is useful to know about is the white point of a color space. For a given display system, the white point is given by the x and y coordinates of the color you get when you set the coefficients of your RGB primaries to the same value.
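Because every component is linear in the RGB coefficients, the white point falls out of a display's RGB to XYZ matrix. With all coefficients equal to 1, each of X, Y, and Z is just a row sum. The sketch uses the linear sRGB matrix from IEC 61966-2-1, rounded to four decimals. sRGB is not discussed in the text and serves here only as a familiar example. The result should land on D65, roughly x = 0.3127, y = 0.3290.

```python
# Linear sRGB -> XYZ matrix (IEC 61966-2-1, rounded to four decimals).
SRGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def white_point(rgb_to_xyz):
    """Chromaticity (x, y) of the color produced by R = G = B = 1."""
    # With equal coefficients, each XYZ component is just the row sum.
    X, Y, Z = (sum(row) for row in rgb_to_xyz)
    total = X + Y + Z
    return X / total, Y / total


wx, wy = white_point(SRGB_TO_XYZ)
print(f"sRGB white point: x={wx:.4f}, y={wy:.4f}")
```

Any common value for the coefficients gives the same result, since the chromaticity projection divides out the overall scale.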

Over the years, several new color spaces have been introduced that improve on the CIE 1931 ones in various ways. Despite this, the CIE xyY system remains the most commonly used color space for describing the properties of display devices.

Transfer Functions

Before looking at a few video standards, there are two additional concepts that we need to introduce and explain.

Optical-Electronic Transfer Function

The optical-electronic transfer function (OETF) specifies how linear light L captured with some device (a camera) should be encoded in a signal V, i.e. it is a function of the form:

$$V = \mathrm{OETF}(L)$$

Historically V would be an analog signal, but nowadays it is of course encoded digitally. As a typical game developer you’ll likely not have to concern yourself with OETFs that often; the one scenario where they matter is if you plan to mix live-action footage with CGI in your game. In this case it is necessary to know which OETF your footage was captured with, so that you can recover the linear light and correctly mix it with your generated imagery.
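For the footage scenario, recovering linear light means applying the inverse of the capture OETF. The text doesn't say which OETF a given camera uses. As an assumed example, this sketch uses the ITU-R BT.709 OETF, a linear segment near black followed by a power curve. The function names are our own.

```python
def bt709_oetf(light: float) -> float:
    """ITU-R BT.709 OETF: scene linear light in [0, 1] -> signal in [0, 1]."""
    if light < 0.018:
        return 4.5 * light  # linear segment near black
    return 1.099 * light ** 0.45 - 0.099  # power-law segment


def bt709_inverse_oetf(signal: float) -> float:
    """Undo bt709_oetf to recover scene linear light from an encoded signal."""
    # 0.081 = 4.5 * 0.018, the signal value at the end of the linear segment.
    if signal < 0.081:
        return signal / 4.5
    return ((signal + 0.099) / 1.099) ** (1.0 / 0.45)


for light in (0.0, 0.01, 0.18, 1.0):
    v = bt709_oetf(light)
    print(f"L={light:.3f} -> V={v:.4f} -> L={bt709_inverse_oetf(v):.4f}")
```

Applied per channel to decoded footage, `bt709_inverse_oetf` gives values that can be composited with linear-light rendering, provided the footage really was BT.709 encoded.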

Electronic-Optical Transfer Function

The electronic-optical transfer function (EOTF) does the opposite of the OETF, i.e. it specifies how a signal V should be converted to linear light L:

$$L = \mathrm{EOTF}(V)$$

This function is more important for us as game developers, since it specifies how the content we author will appear on our customers’ TVs and monitors.
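As a concrete EOTF, here is a sketch of the ITU-R BT.1886 reference EOTF for HDTV displays. It was chosen as an example and is not taken from the text. `l_w` and `l_b` are the display's white and black luminance in cd/m²; the defaults are illustrative assumptions. The constants a and b are derived so that V = 0 yields the black level and V = 1 yields the white level.

```python
def bt1886_eotf(v: float, l_w: float = 100.0, l_b: float = 0.1,
                gamma: float = 2.4) -> float:
    """ITU-R BT.1886 reference EOTF: signal V in [0, 1] -> luminance (cd/m^2)."""
    root_w = l_w ** (1.0 / gamma)
    root_b = l_b ** (1.0 / gamma)
    # a scales the curve to the white level, b lifts it to the black level.
    a = (root_w - root_b) ** gamma
    b = root_b / (root_w - root_b)
    return a * max(v + b, 0.0) ** gamma


for v in (0.0, 0.5, 1.0):
    print(f"V={v:.1f} -> L={bt1886_eotf(v):.3f} cd/m^2")
```

With `l_b = 0` and `l_w = 1` it reduces to a plain 2.4 power law. It is not the inverse of the BT.709 OETF. That mismatch is intentional, which is one reason the two functions are treated as separate concepts.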

Relation between EOTF and OETF

Though they are related concepts, the EOTF and OETF serve different purposes. The purpose of the OETF is to give us a representation of a captured scene from which we can reconstruct the original linear light (this representation is conceptually the same thing as the HDR framebuffer of your typical game). What happens in a typical movie production pipeline is then: