AI Agents hold great promise for IT ticketing services, but they also bring with them new risks.

Researchers from Cato Networks have revealed that a new AI agent protocol released by Atlassian, a service desk solutions provider, could allow an attacker to submit a malicious support ticket through Jira Service Management (JSM) with a prompt injection.

This proof-of-concept (PoC) attack conducted out by Cato’s team has been dubbed a ‘Living off AI’ attack.

The researchers outlined the technical overview of the PoC attack in a new report shared exclusively with Infosecurity by the Cato CTRL Threat Research team on June 19.

MCP, the New Standard AI Agent Protocol

In May 2025, Atlassian launched its own Model Context Protocol (MCP) server, which embeds AI into enterprise workflows.

MCP is an open standard introduced in November 2024 by Anthropic, the maker of several generative AI models and the AI chatbot Claude.

MPC servers are used to manage and leverage contextual information within a model’s operation.

The MCP architecture consists of an MCP host running locally and several MCP servers. The host, which acts as the agent, can be an AI-powered application (e.g. Claude Desktop), the large language model you’re running on your device (e.g. Claude Sonnet) or an integrated development environment (IDE) like Microsoft’s Visual Studio.

Atlassian’s MCP enables a range of AI-driven actions, including ticket summarization, auto-replies, classification and smart recommendations across JSM and Confluence.

It also enables support engineers and internal users to interact directly with AI from their native interfaces.

‘Living Off AI’ Attack Explained

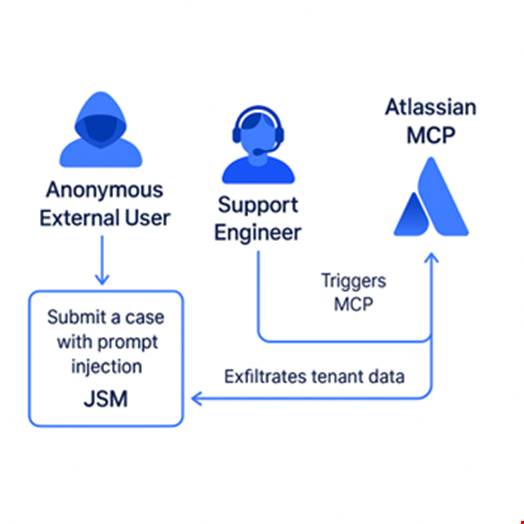

The Cato CTRL researchers performed a PoC attack on Atlassian JSM by using Atlassian’s MCP assets to show how an anonymous external user, connected via JSM, could perform a range of malicious actions, including:

- Triggering an Atlassian MCP interaction by submitting a case, which is later processed by a support engineer using MCP tools like Claude Sonnet, automatically activating the malicious flow

- Inducing the support engineer to unknowingly execute the injected instructions through the Atlassian MCP

- Gaining access to internal tenant data from JSM that should never be visible to external threat actors

- Exfiltrating data from a tenant that the support engineer is connected to, simply by having the extracted data written back into the ticket itself

Here’s how the typical attack chain would unfold:

- An external user submits a crafted support ticket

- An internal agent or automation, linked to a tenant, invokes an MCP-connected AI action

- A prompt injection payload in the ticket is executed with internal privileges

- Data is exfiltrated to the threat actor’s ticket or altered within the internal system

- Without any sandboxing or validation, the threat actor effectively uses the internal user as a proxy, gaining full access

“Notably, in this PoC demo, the threat actor never accessed the Atlassian MCP directly. Instead, the support engineer acted as a proxy, unknowingly executing malicious instructions,” the Cato CTRL researchers said.

Prompt injection via Jira Service Management. Source Cato CTRL Threat Research

Prompt injection via Jira Service Management. Source Cato CTRL Threat Research

While Atlassian was used to demonstrate the ‘Living Off AI’ attack, the Cato researchers believe that any environment where AI executes untrusted input without prompt isolation or context control is exposed to this risk.

“The risk we demonstrated is not about a specific vendor—it's about a pattern. When external input flows are left unchecked with MCP, threat actors can abuse that path to gain privileged access without ever authenticating,” they said

“Many enterprises are likely adopting similar architectures, where MCP servers connect external-facing systems with internal AI logic to improve workflow efficiency and automation. This design pattern introduces a new class of risk that must be carefully considered.”

Recommended Mitigation Measure

Cato Networks recommended that users seeking to prevent or mitigate this type of attack create a rule to block or alert on any remote MCP tool calls, such as create, add or edit. Such a measure will allow the users to:

- Enforce least privilege on AI-driven actions

- Detect suspicious prompt usage in real time

- Maintain audit logs of MCP activity across the network

This report comes two days after Asana announced a bug in its MCP server that exposed customer data to other organizations.

Photo credits: Konstantin Savusia/Michael Vi/Shutterstock

Read now: Security Experts Flag Chrome Extension Using AI Engine to Act Without User Input

No tags.