[This post originally appeared on my blog]

Urza’s Dream Engine is a neural network project I’ve been working on for a few months. It’s a bot that creates art in the style of Magic: The Gathering cards. It began as an effort to create art to go with the cards created by the amazing Twitter bot, RoboRosewater, and grew into its own beast. It was my first foray into machine learning as well as my first project focused on producing still images.

You can see some of the output on the site for the project here: http://andymakes.com/urzasdreamengine/

Although Urza’s Dream Engine grew a life of its own, it culminated in a booster draft, held at Babycastles in NYC on Nov 18th 2017, using the RoboRosewater cards paired with my bot’s art. This was a game played by humans, but designed and illustrated by machines. In this post I’ll go through my process step by step starting from the initial idea to the Babycastles event.

The Urza’s Dream Engine site has downloads for the images, cards, and print-and-play booster packs used at the Babycastles event.

Conception

This whole project started because I was enamored with a Twitter bot called @RoboRosewater. I certainly wasn’t the only one; as of this writing, the account has 23,500 followers. RoboRosewater is a neural network bot created by Chaz and Reed that has been running since 2015. Each day it posts a new Magic: The Gathering card generated by a machine learning algorithm. Basically, it is a program that has been trained on all of the existing MTG cards (roughly 30,000 over the course of the game’s two-decade run) and now attempts to create new ones in the same vein. The bot itself only generates the text for these cards.

Most of the cards it makes are borderline nonsense (but still very fun), but a surprising amount of the output is actually playable. Maybe broken in the sense that the card is too strong or too weak, but actually legal within the (fairly complex) rules of the game. Even these legal cards are still alien, like a glimpse into a bizarre alternate reality of the game. Some are amusingly pointless (like a card that states that players must pay the cost of the spells they cast). Others staple three seemingly unrelated abilities together. And some have genuinely interesting effects that as far as I know have never been used in the game’s long run. If you like Magic or bots I highly recommend following this account.

Given my interest in exploring unusual parts of the game in my Weird: MTG series, holding a RoboRosewater booster draft seemed like a natural fit. I had something of a convenient problem though: the cards posted on the RoboRosewater account use stock images of computers as their art. They basically all look the same, and trying to play with them would be hugely difficult, as card art is an important identifying feature, especially when playing with a new set. Imagine having to read 20 identical cards on a table every time you are thinking about your next move.

I say a “convenient” problem because I am usually keen to try a new project, and the challenge of creating illustrations for these cards sounded exciting. The text was generated via automation, so I knew the images should be as well. Early on I thought about trying to create an openFrameworks sketch that would cut up and recombine the existing art from the game, but it didn’t feel quite right. The cards were created by a neural network, so the art should be as well. I’d never messed around with neural networks myself, but I was as blown away by Google’s Deep Dream algorithm as anybody else, so now seemed like the time to learn!

Getting Started

I’m a total script kiddie when it comes to machine learning. People get their PhDs creating and working with these techniques. I’m a game designer who likes to tinker. As a result, I tried setting up a lot of different neural nets on my laptop (which has no GPU acceleration, but I wasn’t going to let that stop me). With most, I ran into some roadblock after 5 or 6 hours that couldn’t be solved by importing a new Python library, and I’d move on to the next one. Finally I had success with a TensorFlow implementation of Deep Convolutional Generative Adversarial Networks (DCGAN) by Taehoon Kim. I followed the instructions on the GitHub page and trained the bot on some sample images of faces. After tweaking some of the source code to work on my machine, I produced a set of my own slightly-off faces and I was off to the races.

Getting the Data

Part of how neural networks function is that before they can attempt to replicate a style, they must first train on an existing data set to learn what the thing is. This data set is no trivial thing. In order to work well, the data must be ordered (being of similar type and presented in the same way) and there must be a lot of it. The sample set that came with Kim’s TensorFlow implementation contained 200,000 images of celebrities, all from the neck up, facing the camera and cropped to be the same size.

Fortunately, the sheer volume of data associated with Magic: The Gathering is huge, probably rivaled only by major sports like baseball. It is the first and longest-running collectible card game. It launched in 1993, and Wizards of the Coast, the company that produces it, has released multiple new sets every year since then. This means that there are currently over 30,000 unique Magic cards. This pool, while small in terms of what would be best for neural net generated art, is still larger than that of any other game I could hope for. Of course, the art in the game is also not nearly as uniform as the celebrity data set I described above, but I didn’t need perfect output. I was OK with things getting weird and I wanted to see what the bot could do.

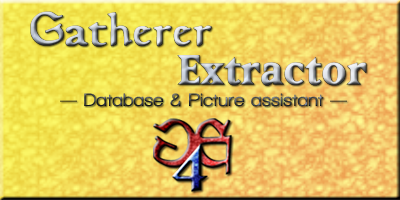

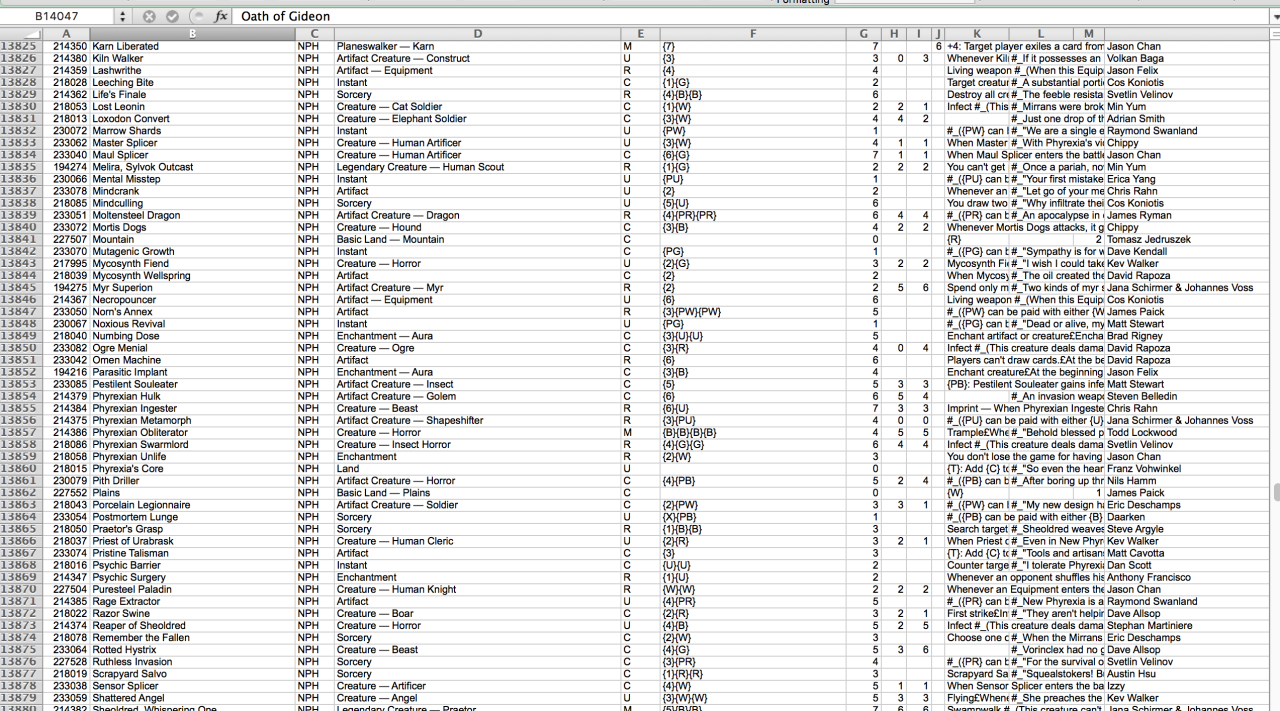

First things first, I needed to get that data. Luckily, I am not the first person who wanted to scrape info from all existing MTG cards. There is a piece of software called Gatherer Extractor that will download text and images within certain parameters from Gatherer, Wizards of the Coast’s official online MTG card database. This software, released for free by MTG Salvation user chaudakh, prepares an Excel spreadsheet file with all of the requested information and can also download the card scans (the images showing the full card). To my delight it also had a check-box to crop the card image to just the art, saving me from writing a Python or openFrameworks script to do the same thing.

I tested Gatherer Extractor with a single expansion set, and after seeing that it did what I wanted, I set it to work pulling the text and image of every card in the game. The resulting spreadsheet made my computer chug a little bit, but it had everything, cross-referenced with the image associated with each card.

Sorting

Card art in Magic: The Gathering is dependent on a lot of things, but there are some game factors that are frequent indicators of aspects of the illustration. Card color is a big one. Red cards tend to lean toward warm colors in their palette, blue cards toward cool colors, etc. Creatures also tend to look different than artifacts, which look different than sorcery spells (or so I thought; more on that in a bit).

Initially I had hoped to have the bot simply train on all of the card text and art so that it could learn the difference between, say, a red creature and a white enchantment itself, but I soon realized that this was more than I could do with my setup (or at least with my experience level). It became clear that the best course of action was to sort the art beforehand by whatever metric I wanted to use, and then have the bot train only on those images. To do this, I built a simple Python script that would let me set some parameters (for instance “blue creatures” or “enchantments”) and would then scan the spreadsheet to find all cards that met the criteria. For each card that fulfilled the search query, the image associated with that card was copied to a new folder to use as the training set.
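A sorting script along these lines can be quite short. The sketch below is an illustration, not the actual script from the project: it assumes the spreadsheet has been exported to CSV, and the column names (`color`, `type`, `image_file`) and the function names are hypothetical stand-ins for whatever the real spreadsheet uses.

```python
import csv
import shutil
from pathlib import Path


def matches(card, criteria):
    """True if every wanted value appears in the matching column (case-insensitive)."""
    return all(
        wanted.lower() in (card.get(field) or "").lower()
        for field, wanted in criteria.items()
    )


def build_training_set(csv_path, image_dir, out_dir, criteria):
    """Copy the art of every matching card into out_dir; return the copied file names."""
    image_dir, out_dir = Path(image_dir), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    with open(csv_path, newline="", encoding="utf-8") as handle:
        for card in csv.DictReader(handle):
            if not matches(card, criteria):
                continue
            # "image_file" is an assumed column holding the art's file name.
            source = image_dir / (card.get("image_file") or "")
            if source.is_file():  # skip rows whose art was never downloaded
                shutil.copy2(source, out_dir / source.name)
                copied.append(source.name)
    return copied
```

Calling `build_training_set("cards.csv", "art", "blue_creatures", {"color": "blue", "type": "creature"})` would fill a `blue_creatures` folder with the matching art. Because the match is a simple substring test, a multicolor card whose color field contains "Blue" would be included as well; a stricter comparison is an easy change if that is not wanted.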

Early Batches

The first training set I tried was all creatures with the “goblin” creature type. This resulted in a little under 400 images for the bot to train on. After a few hours the results were still rubbish. After more experimentation I would learn that I had made two errors: 400 images was a minuscule dataset, and a few hours was not nearly enough time to train, even with a good data set.

I switched gears and tried white creature cards, figuring that color was the major indicator of the palette used in the art and that creatures would at least all have a main subject in the art (as opposed to sorceries or enchantments). I let it run for four days this time. As it worked, it would spit out a sample image every hour or so. I was able to watch a formless mess turn into something more discernible. (The sample images are produced in an 8x8 grid, so each sheet contains 64 separate images produced by the bot.)

This bird-fish giant was the first image produced by Urza’s Dream Engine (then unnamed) that I really loved. It’s still one of my favorites.

At this point, I knew things were working. I messed around with the settings for other groups, but as far as the code I was using went, very little changed. The biggest modification was that I stopped breaking up the images by card type and started using color as the only criterion for sorting. Contrary to my initial assumption, I realized that nearly every card in Magic has a central figure in the art, not just the creature cards. In order to illustrate the magical effect of a spell or enchantment, the art shows a central figure either reaping the benefits of or being victimized by the spell in question. Since there was little artistic difference between the card types, there was no reason to make smaller training sets.

These three cards all represent spells in the game, but the art could just as easily go with a creature, since each one focuses on a character.

Creating and Posting

The next two months or so were spent with the bot quietly running in the background of my laptop (and absolutely destroying my battery life when unplugged). I worked on other projects, most notably finishing up my sequencer toy Bleep Space with musician Dan Friel. During this time I occasionally posted the images the bot created on this Tumblr and on Twitter. The images resonated with a lot of folks and I always enjoyed hearing what people saw in them. The images are abstract enough that while conveying the general vibe of MTG art, they function a bit like a Rorschach test, allowing for many interpretations.

In order to make the images a bit more human-readable, I used a few Photoshop actions that I could run as a batch. The main one I used took the 8x8 grid of images that the machine learning bot spat out and cut the image into 64 individual images. These images were then blown up slightly to be a bit easier to see and so that they would eventually fit nicely onto the RoboRosewater card frames.
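I did this step with Photoshop actions, but the same slicing is easy to script. The sketch below is a swapped-in alternative, not what was actually used: it only computes the crop rectangle for each tile and the enlarged size, and the 512x512 sheet size, the scale factor, and the function names are assumptions made for illustration.

```python
def grid_boxes(sheet_width, sheet_height, rows=8, cols=8):
    """Return (left, upper, right, lower) pixel boxes for each tile, row by row."""
    tile_w = sheet_width // cols
    tile_h = sheet_height // rows
    return [
        (col * tile_w, row * tile_h, (col + 1) * tile_w, (row + 1) * tile_h)
        for row in range(rows)
        for col in range(cols)
    ]


def scaled_size(box, factor):
    """Return the (width, height) of a tile after enlarging it by factor."""
    left, upper, right, lower = box
    return (round((right - left) * factor), round((lower - upper) * factor))


# Assumed example: a 512x512 sample sheet holding an 8x8 grid of 64x64 tiles.
boxes = grid_boxes(512, 512)
print(len(boxes), boxes[0], scaled_size(boxes[0], 4))
```

The boxes use the (left, upper, right, lower) ordering that image libraries such as Pillow expect for cropping, so each one could be passed to `Image.crop` and the result resized to `scaled_size(box, factor)` with `Image.resize` before saving.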

Around this time, the images were featured in an article on Waypoint (the games culture wing of Vice) by Cameron Kunzelman. As I noted in the article, one of the things I was enjoying most about the bot was that it was generating images that seemed to have some of the flowing style of Rebecca Guay, my favorite illustrator for MTG.

On top are Guay’s illustrations for Bitterblossom and Regenerate. On the bottom are two of the pieces created by Urza’s Dream Engine.

Creating The RoboDraft

I was collecting and displaying the images, but I still wanted to do something with them. My background is in interaction, and that hasn’t changed. My original plan was to pair my images with RoboRosewater cards and hold a booster draft with them, and that was still what I wanted to do. My previous Weird MTG events had been much simpler (a booster draft using card